Research (Under construction)

Medical Interpreter Robot

Non-verbal cues play a vital role in communication between healthcare stakeholders (doctors, patients) who speak different native languages. This project explores which gestures matter in healthcare settings and how humanoid robots can learn those gestures while interacting with humans.

Human-LLM Collaboration in Qualitative Data Analysis

Qualitative data analysis is labor-intensive. This research explores how AI, particularly LLMs, could collaborate with researchers to improve the quality and the efficiency of the qualitative data analysis process.

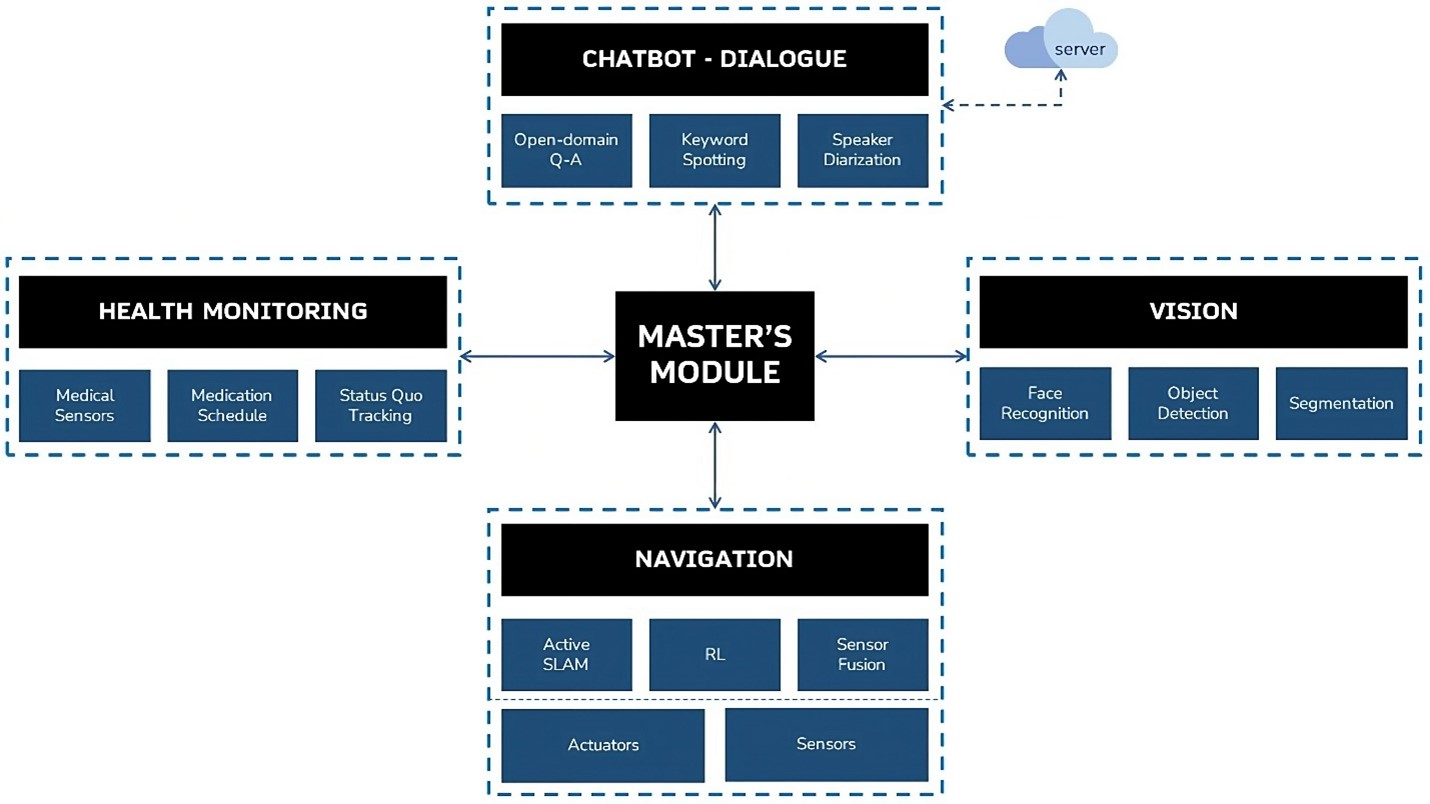

MedBot

Since Aug 2022, I have been the mentor for the first undergraduate intelligent robot research team at VinUniversity.

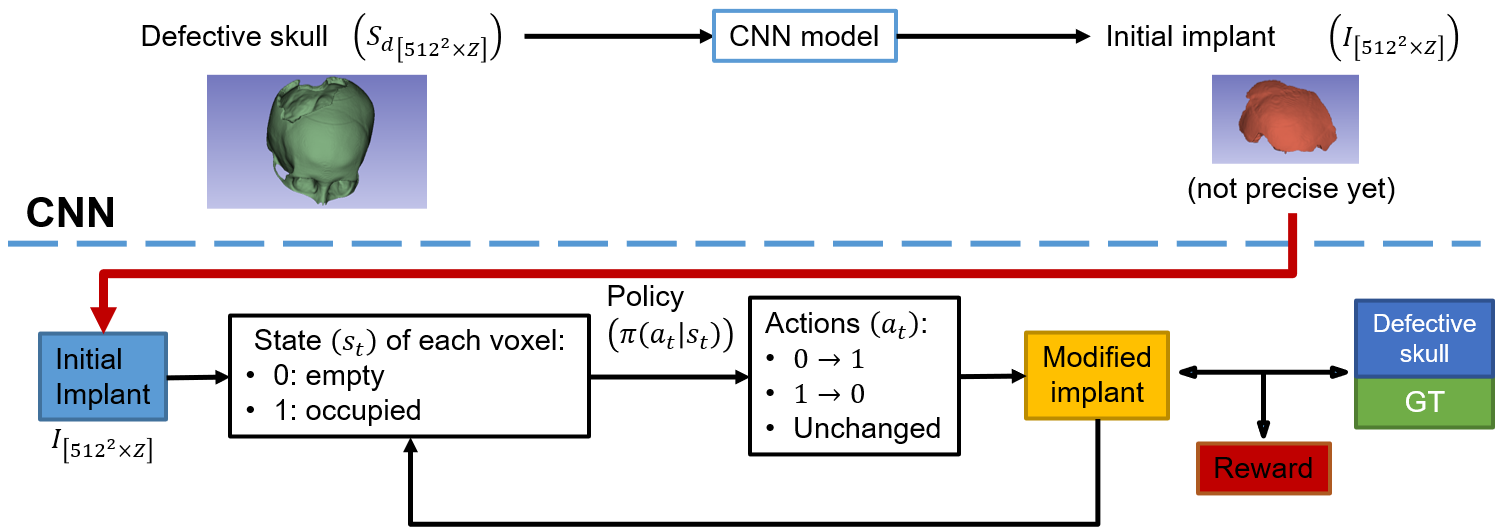

Automatic cranial implant design with multi-agent reinforcement learning

PI: Dr. Huy-Hieu Pham, Prof. Minh Do

I proposed a solution to use reinforcement learning to refine cranial implant designs for varied real-world cases.

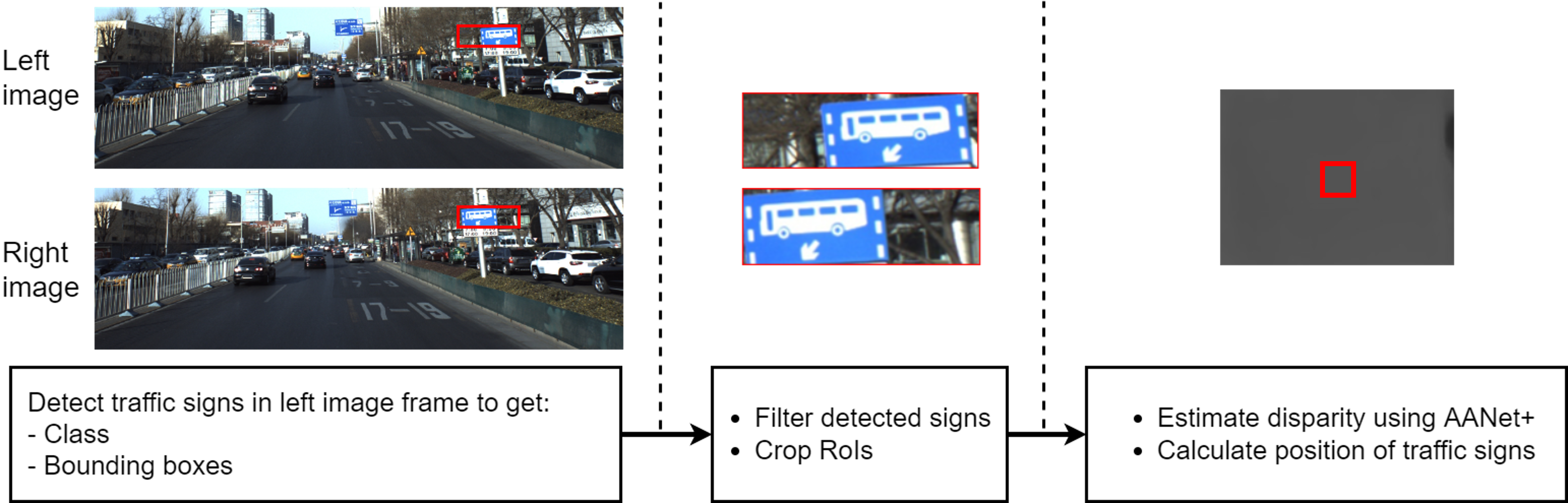

Lightweight Visual SLAM for autonomous vehicles based on GraphSLAM and Deep Learning

I proposed a new SLAM system that uses an object detection model and a stereo depth estimation model for the frontend process. GraphSLAM was used to avoid redundancy and for loop closing. The solution is expected to improve both the accuracy and the computational efficiency of the frontend process.

AIR-HUST

AIR-HUST is an autonomous intelligent service mobile robot developed by the Autonomous Intelligent Robotics Lab at HUST. I led the undergraduate students on this project. The robot uses deep learning computer vision models for fire warning and medical object detection.

CBot

PI: Dr. Xuan-Ha Nguyen and Assoc. Prof. Chan-Hung Nguyen

CBot is an intelligent service mobile robot based on Turtlebot2, developed at CMC Corporation. I developed the robot's software system. In this project, I also researched 2D SLAM for indoor robots.